Sat Mag

Brief history of plagues and pandemics

Uditha Devapriya

By the 14th century, trade routes between the East and West had made it easier for pandemics to spread, while conquests by the Spanish and the Portuguese in the 15th and 16th centuries would introduce several diseases to the New World. Trade and colonialism hence became, by the end of the Renaissance, the main causes of plague, which scientific advancement did little to combat, much less eliminate: a physician in the 17th century would have been as baffled or helpless as a physician in the 14th or 15th in the face of an outbreak.

No doubt rapid urbanisation and gentrification had a prominent say in the proliferation of such outbreaks, but among more relevant reasons would have been poor sanitary conditions, lack of communication and accessibility, and class stratifications which excluded the lower orders – the working class as well as peasants in the colonies – from a healthcare system that pandered to an elite minority. By 1805, the only hospitals built in Ceylon were those serving military garrisons in places like Colombo, Galle, and Trincomalee.

Among the more virulent epidemics, of course, was the notorious plague. Various studies have tried to chart the origins and the trajectory of the disease. There were two outbreaks in Rome: the Antonine Plague in 165 AD and the Justinian Plague in 541 AD. With a lack of proper inscriptional evidence, we must look at literary sources: the physician Galen for the Antonine, and Procopius and John of Ephesus for the Justinian.

Predating both of these was an outbreak reported by the historian Thucydides in 430 BC Athens, but scholars have ascertained that this was less a plague than a smallpox contagion. In any case, by 541 AD plague had become a fact of life in the region, and not only in Pagan Rome; within the next few years, it had spread to the Arab world, where scholars, physicians, and theologians tried to diagnose it. Commentaries from this period tell us of theologians tackling a religious crisis born out of pestilence: in the beginning, Islamic theology had laid down a prohibition against Muslims “either entering or fleeing a plague-stricken land”, and yet by the time these epidemics ravaged their land, fleeing an epidemic was reinterpreted to mean acting in line with God’s wishes: “Whichever side you let loose your camels,” Umar I, the second Rashidun caliph, told Abu Ubaidah, “it would be the will of God.” As with all such religious injunctions, this changed in the light of an urgent material need: the prevention of an outbreak. We see similar modifications in other religious texts as well.

Plagues and pandemics also feature in the Bible. One frequently cited story is that of the Philistines who, having taken the Ark of the Covenant from the Israelites, were struck by God with a disease which “smote them with emerods” (1 Samuel 5:6). J. F. D. Shrewsbury noted down three clues for the identification of the illness: that it spread from an army in the field to a civilian population, that it involved the spread of emerods in the “secret part” of the body, and that it compelled the making of “seats of skin.” The conventional wisdom for a long time had been that this was, as with 541 AD Rome, an outbreak of the plague, but Shrewsbury on the basis of the three clues ascertained that it was more plausibly a reference to an outbreak of haemorrhoids. On the other hand, the state of medicine being what it would have been in Philistia and Israel, lesions in the “secret part” (the anus) may have been construed as a sign of divine retribution in line with a pestilence: to a civilisation of prophets, even haemorrhoids and piles would have been comparable to plague sent from God.

Estimates for population loss from these pandemics are notoriously difficult to determine. On the one hand, being the only sources we have as of now, literary texts accurately record how civilians conducted their daily lives despite the pestilence, while on the other, writers of these texts resorted to occasional if not frequent exaggeration to emphasise the magnitude of the disease. Both Procopius and John of Ephesus are agreed on the point, for instance, that the Justinian Plague was preceded by hallucinations, which then progressed to fever, languor, and on the second or third day to bubonic swelling “in the groin or armpit, beside the ears or on the thighs.” However, there is another account, by Evagrius Scholasticus, whose record of the outbreak in his hometown Antioch was informed by a personal experience with a disease he contracted as a schoolboy and to which he later lost a wife, children, grandchildren, servants and, presumably, friends. It has been pointed out that this may have injected a subjective bias into his account, but at the same time, given that Procopius and John followed a model of the plague narrative laid down by Thucydides centuries before, we can consider Evagrius’s as a more original if not more accurate record, despite prejudices typical of writers of his time: for instance, his (unfounded) claim that the plague originated in Ethiopia.

Much water has flowed through the debate over where the plague originated. A study in 2010 concluded that the bacterium Yersinia pestis evolved in, or near, China. Historical evidence marshalled for this theory points at the fact that by the time of the Justinian plague the Roman government had solidified links with China over the trade of silk. Popular historians contend that the Silk Road, and the Zheng He expeditions, may have spread the contagion through the Middle East to southern Europe, a line of thinking even the French historian Fernand Braudel subscribed to in his work on the history of the Mediterranean. However, as Ole Benedictow in his response to the 2010 study points out, “references to bubonic plague in Chinese sources are both late and sparse”, a criticism made earlier, in 1977, by John Norris, who observed that it is likely that literary references to the Chinese origin of the plague were informed by ethnic and racial prejudices; a similar animus prevailed among the early Western chroniclers against what they perceived as the “moral laxity” of non-believers.

A more plausible thesis is that the bacterium had its origins around 5,000 or 6,000 years ago during the Neolithic era. A study conducted two years ago (Rascovan 2019) posits an original theory: that the genome for Yersinia pestis emerged as the first discovered and documented case of plague 4,900 years ago in Sweden, “potentially contributing” to the Neolithic decline the reasons for which “are still largely debated.” However, like the 2010 study this too has its pitfalls, among them a lack of the sort of literary sources which, however biased they may be, we have for the Chinese genesis thesis. It is clear, nevertheless, that the plague was never at home in a specific territory, and that despite the length and breadth of the Silk Road it could not have made inroads to Europe through the Mongol steppes. To contend otherwise is to not only rebel against geography, but also ignore pandemics the origins of which were limited to neither East and Central Asia nor the Middle East.

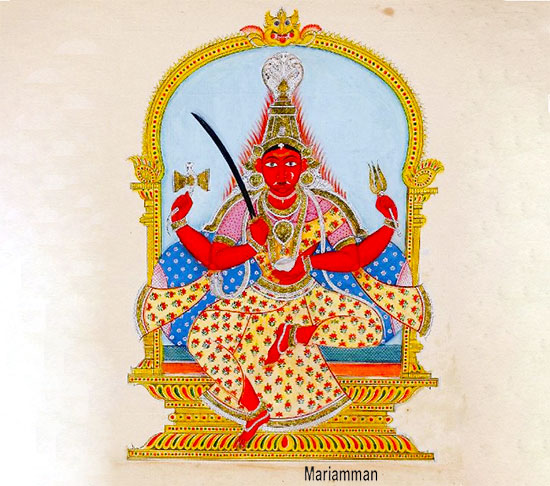

Such outbreaks, moreover, were not unheard of in the Indian subcontinent, even if we do not have enough evidence for when, where, and how they occurred. The cult of Mariamman in Tamil Nadu, for instance, points to cholera as well as smallpox epidemics in the region, given that she is venerated for both. “In India, a cholera-like diarrheal disease known as Visucika was prevalent from the time of the Susruta”, an Indian medical tract that contains the following passage, in which the illness referred to seems to be the plague:

Kakshabhageshu je sfota ayante mansadarunah

Antardaha jwarkara diptapapakasannivas

Saptahadwa dasahadwa pakshadwa ghnonti manavam

Tamagnirohinim vidyat asadyam sannipatatas

Or in English, “Deep, hard swellings appear in the armpit, giving rise to violent fever, like a burning fire, and a burning, swelling sensation inside. It kills the patient within seven, 10, or 15 days. It is called Agnirohini. It is due to sannipata or a deranged condition of all the three humours, vata, pitta, and kapha, and is incurable.”

The symptoms no doubt point to plague, even if we can’t immediately jump to such a conclusion. The reference to a week or 15 days is indicative of modern bubonic plague, while the burning sensation and violent fever show an illness that rapidly terminates in death. The Susruta Samhita, from which this reference is taken, was written in the ninth century AD. We do not have a similar tract in Sri Lanka from that time, but the Mahavamsa tells us that in the third century AD, during the reign of Sirisangabo, there was an outbreak of a disease the symptoms of which included the reddening of the eyes. Mahanama thera, no doubt attributing it to the wrath of divine entities, personified the pandemic in a yakinni called Rattakkhi (or Red Eye). Very possibly the illness was a cholera epidemic, or even the plague.

China, India, and Medieval Europe aside, the second major wave of pandemics came about a while after the Middle Ages and Black Death, and during the Renaissance, when conquerors from Spain and Portugal, having divided the world between the two countries, introduced and spread diseases to which they had become immune among the natives of the lands they sailed to. Debates over the extent to which New World civilisations were destroyed and decimated by these diseases continue to rage. The first attempts to determine pre-colonial populations in the New World were made in the early part of the 20th century. The physiologist S. F. Cook published his research on the intrusions of diseases from the Old World to the Americas from 1937. In 1966, the anthropologist Henry F. Dobyns argued that most studies understated the numbers. In the 1930s when research on the topic began, conservative estimates put the North American pre-Columbian population at one million. Dobyns upped it to 10 million and, later, 18 million; most of them, he concluded, were wiped out by the epidemics.

And it didn’t stop at that. These were followed by outbreaks of diseases associated with the “white man”, including yaws and cholera. Between 1817 and 1917, for instance, no fewer than six cholera epidemics devastated the subcontinent. Medical authorities were slow to act, even in Ceylon, for the simple reason that by the time of the British conquest, filtration theory in the colonies had deemed it prudent that health, as with education, be catered to a minority. Doctors thus did not find their way to far flung places suffering the most from cholera, while epidemics were fanned even more by the influx of South Indian plantation workers after the 1830s. Not until the 1930s could authorities respond properly to the pandemic; by then, the whole of the conquered world, from Asia all the way to Africa, had turned into a beleaguered and diseased patient, not unlike Europe in the 14th century.

The writer can be reached at udakdev1@gmail.com

October 13 at the Women’s T20 World Cup: Injury concerns for Australia ahead of blockbuster game vs India

Australia vs India

Sharjah, 6pm local time

Australia have major injury concerns heading into the crucial clash. Just four balls into the match against Pakistan, Tayla Vlaeminck was out with a right shoulder dislocation. To make things worse, captain Alyssa Healy suffered an acute right foot injury while batting on 37 as she hobbled off the field with Australia needing 14 runs to win. Both players went for scans on Saturday.

India captain Harmanpreet Kaur, who had hurt her neck in the match against Pakistan, turned up with a pain-relief patch on the right side of her neck during the Sri Lanka match. She also didn’t take the field during the chase. Fast bowler Pooja Vastrakar bowled full-tilt before the Sri Lanka game but didn’t play.

India will want a big win against Australia. If they win by more than 61 runs, they will move ahead of Australia, thereby automatically qualifying for the semi-final. In a case where India win by fewer than 60 runs, they will hope New Zealand win by a very small margin against Pakistan on Monday. For instance, if India make 150 against Australia and win by exactly 10 runs, New Zealand need to beat Pakistan by 28 runs defending 150 to go ahead of India’s NRR. If India lose to Australia by more than 17 runs while chasing a target of 151, then New Zealand’s NRR will be ahead of India, even if Pakistan beat New Zealand by just 1 run while defending 150.
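The scenarios above all turn on net run rate (NRR): a team's runs scored per over across the tournament, minus the runs it conceded per over. The following is a minimal sketch of that arithmetic, not the tournament's official calculator. The function names and the sample scores are hypothetical, and it assumes the usual convention that a side bowled out is entered as having faced its full quota of overs.

```python
from fractions import Fraction


def overs_to_balls(overs: str) -> int:
    """Convert cricket overs notation, e.g. '19.3' (19 overs, 3 balls), to balls."""
    whole, _, part = overs.partition(".")
    return int(whole) * 6 + (int(part) if part else 0)


def net_run_rate(matches) -> float:
    """Compute NRR from (runs_for, overs_faced, runs_against, overs_bowled) tuples.

    Assumption: if a side was bowled out, the caller passes the full quota
    (e.g. '20') as the overs figure for that innings.
    """
    runs_for = sum(m[0] for m in matches)
    balls_faced = sum(overs_to_balls(m[1]) for m in matches)
    runs_against = sum(m[2] for m in matches)
    balls_bowled = sum(overs_to_balls(m[3]) for m in matches)
    # Runs per over = runs * 6 / balls; Fraction avoids rounding drift.
    rate_for = Fraction(runs_for * 6, balls_faced)
    rate_against = Fraction(runs_against * 6, balls_bowled)
    return float(rate_for - rate_against)


# Hypothetical single match: 150 scored in 20 overs, 140 conceded in 20 overs.
print(net_run_rate([(150, "20", 140, "20")]))
```

Because the rate is taken over aggregate runs and overs for the whole tournament rather than averaged match by match, a single large win can shift a team's NRR considerably, which is why the margin against Australia matters so much here.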

Overall, India have won just eight out of 34 T20Is they’ve played against Australia. Two of those wins came in the group-stage games of previous T20 World Cups, in 2018 and 2020.

Australia squad:

Alyssa Healy (capt & wk), Darcie Brown, Ashleigh Gardner, Kim Garth, Grace Harris, Alana King, Phoebe Litchfield, Tahlia McGrath, Sophie Molineux, Beth Mooney, Ellyse Perry, Megan Schutt, Annabel Sutherland, Tayla Vlaeminck, Georgia Wareham

India squad:

Harmanpreet Kaur (capt), Smriti Mandhana (vice-capt), Yastika Bhatia (wk), Shafali Verma, Deepti Sharma, Jemimah Rodrigues, Richa Ghosh (wk), Pooja Vastrakar, Arundhati Reddy, Renuka Singh, D Hemalatha, Asha Sobhana, Radha Yadav, Shreyanka Patil, S Sajana

Tournament form guide:

Australia have three wins in three matches and are coming into this contest having comprehensively beaten Pakistan. With that win, they also all but sealed a semi-final spot thanks to their net run rate of 2.786. India have two wins in three games. In their previous match, they posted the highest total of the tournament so far – 172 for 3 – and in return bundled Sri Lanka out for 90 to post their biggest win by runs at the T20 World Cup.

Players to watch:

Two of their best batters finding their form bodes well for India heading into the big game. Harmanpreet and Mandhana’s collaborative effort against Pakistan boosted India’s NRR with the semi-final race heating up. Mandhana, after a cautious start to her innings, changed gears and took on Sri Lanka’s spinners to make 50 off 38 balls. Harmanpreet, continuing from where she’d left off against Pakistan, played a classic, hitting eight fours and a six on her way to a 27-ball 52. It was just what India needed to reinvigorate their T20 World Cup campaign.

[Cricinfo]

Living building challenge

By Eng. Thushara Dissanayake

Primitive man lived in caves to get shelter from the weather. With the progression of human civilization, people wanted more sophisticated buildings to fulfill many other needs and were able to accomplish them with the help of advanced technologies. Security, privacy, storage, and living with comfort are the common requirements people expect today from residential buildings. In addition, different types of buildings are designed and constructed as public, commercial, industrial, and even cultural or religious with many advanced features and facilities to suit different requirements.

We are facing many environmental challenges today. The most severe of those is global warming, which results in many negative impacts, like floods, droughts, strong winds, heatwaves, and sea level rise due to the melting of glaciers. We are experiencing many of those in addition to some local issues like environmental pollution. According to estimates, buildings account for nearly 40% of all greenhouse gas emissions. In light of these issues, we have two options: we change, or we wait till the change comes to us. Waiting till the change comes to us means that we do not care about our environment, and as a result we would have to face disastrous consequences. How, then, can we change in terms of building construction?

Before the green concept and green building practices came into play, the majority of buildings in Sri Lanka were designed and constructed with a focus only on their intended functional requirements. Hence, it was very likely that the whole process of design, construction, and operation went against nature unless it followed specific regulations that would minimize negative environmental effects.

We can no longer proceed with the way we design our buildings, which consumes a huge amount of material and non-renewable energy. We are very concerned about the food we eat and the things we consume. But we do not worry about what a building is made of. If buildings are to become a part of our environment, we have to design, build and operate them based on the same principles that govern the natural world. Ultimately, it is not about the existence of the buildings; it is about us. In other words, our buildings should be a part of our natural environment.

The living building challenge is a remarkable design philosophy developed by American architect Jason F. McLennan, the founder of the International Living Future Institute (ILFI). The International Living Future Institute is an environmental NGO committed to catalyzing the transformation toward communities that are socially just, culturally rich, and ecologically restorative. Accordingly, a living building must meet seven strict requirements, or rather certifications, which are called the seven “petals” of the living building. They are Place, Water, Energy, Equity, Materials, Beauty, and Health & Happiness. Presently there are about 390 projects around the world that are being implemented according to Living Building certification guidelines. Let us see what these seven petals are.

Place

This is mainly about using the location wisely. Ample space is allocated to grow food. The location is easily accessible for pedestrians and those who use bicycles. The building maintains a healthy relationship with nature. The objective is to move away from commercial developments to eco-friendly developments where people can interact with nature.

Water

It is recommended to use potable water wisely, and manage stormwater and drainage. Hence, all the water needs are captured from precipitation or within the same system, where grey and black waters are purified on-site and reused.

Energy

Living buildings are energy efficient and produce renewable energy. They operate in a pollution-free manner without carbon emissions. They rely only on solar energy or any other renewable energy and hence there will be no energy bills.

Equity

What if a building can adhere to social values like equity and inclusiveness benefiting a wider community? Yes indeed, living buildings serve that end as well. The property blocks neither fresh air nor sunlight to other adjacent properties. In addition, the building does not block any natural water path and emits nothing harmful to its neighbors. On the human scale, the equity petal recognizes that developments should foster an equitable community regardless of an individual’s background, age, class, race, gender, or sexual orientation.

Materials

Materials are used without harming their sustainability. They are non-toxic and waste is minimized during the construction process. The hazardous materials traditionally used in building components like asbestos, PVC, cadmium, lead, mercury, and many others are avoided. In general, the living buildings will not consist of materials that could negatively impact human or ecological health.

Beauty

Our physical environments are not that friendly to us and sometimes seem to be inhumane. In contrast, a living building is biophilic (inspired by nature) with aesthetical designs that beautify the surrounding neighborhood. The beauty of nature is used to motivate people to protect and care for our environment by connecting people and nature.

Health & Happiness

The building has a good indoor and outdoor connection. It promotes the occupants’ physical and psychological health while causing no harm to the health of its neighbors. It has inviting stairways and is equipped with operable windows that provide ample natural daylight and ventilation. Indoor air quality is maintained at a satisfactory level, and the kitchen, bathrooms, and janitorial areas are provided with exhaust systems. Further, mechanisms placed at entrances prevent materials from being carried inside on shoes.

The Bullitt Center building

The Bullitt Center, located in the middle of Seattle in the USA, is renowned as the world’s greenest commercial building and the first office building to earn Living Building certification. It is a six-story building with an area of 50,000 square feet. The area existed as a forest before the city was built. Hence, the Bullitt Center building has been designed to mimic the functions of a forest.

The energy needs of the building are met entirely by the solar power system on the rooftop. Even though Seattle is a relatively cloudy city, the Bullitt Center has been able to produce more energy than it needs, becoming one of the “net positive” solar energy buildings in the world. The important point is that if a building is energy efficient, the area of the roof alone is sufficient to generate enough solar power to meet its energy requirement.

It is equipped with an automated window system that is able to control the inside temperature according to external weather conditions. In addition, a geothermal heat exchange system is available as the source of heating and cooling for the building. Heat pumps convey heat stored in the ground to warm the building in the winter. Similarly, heat from the building is conveyed into the ground during the summer.

The potable water needs of the building are met by treating rainwater. The grey water produced from the building is treated and re-used to feed rooftop gardens on the third floor. The black water doesn’t need a sewer connection as it is treated to a desirable level and sent to a nearby wetland, while human biosolids are diverted to a composting system. Further, nearly two thirds of the rainwater collected from the roof is fed into the groundwater, a process that resembles the hydrologic function of a forest.

It is encouraging to see that most of our large-scale buildings are designed and constructed incorporating green building concepts, which are mainly based on environmental sustainability. The living building challenge can be considered an extension of the green building concept. Amanda Sturgeon, the former CEO of the ILFI, has this to say in this regard: “Before we start a project trying to cram in every sustainable solution, why not take a step outside and just ask the question; what would nature do?”

Something of a revolution: The LSSP’s “Great Betrayal” in retrospect

By Uditha Devapriya

On June 7, 1964, the Central Committee of the Lanka Sama Samaja Party convened a special conference at which three resolutions were presented. The first, moved by N. M. Perera, called for a coalition with the SLFP, inclusive of any ministerial portfolios. The second, led by the likes of Colvin R. de Silva, Leslie Goonewardena, and Bernard Soysa, advocated a line of critical support for the SLFP, but without entering into a coalition. The third, supported by the likes of Edmund Samarakkody and Bala Tampoe, rejected any form of compromise with the SLFP and argued that the LSSP should remain an independent party.

The conference was held a year after three parties – the LSSP, the Communist Party, and Philip Gunawardena’s Mahajana Eksath Peramuna – had founded a United Left Front. The ULF’s formation came in the wake of a spate of strikes against the Sirimavo Bandaranaike government. The previous year, the Ceylon Transport Board had waged a 17-day strike, and the harbour unions a 60-day strike. In 1963 a group of working-class organisations, calling itself the Joint Committee of Trade Unions, began mobilising itself. It soon came up with a common programme, and presented a list of 21 radical demands.

In response to these demands, Bandaranaike eventually supported a coalition arrangement with the left. In this she was opposed, not merely by the right-wing of her party, led by C. P. de Silva, but also those in left parties opposed to such an agreement, including Bala Tampoe and Edmund Samarakkody. Until then these parties had never seen the SLFP as a force to reckon with: Leslie Goonewardena, for instance, had characterised it as “a Centre Party with a programme of moderate reforms”, while Colvin R. de Silva had described it as “capitalist”, no different to the UNP and by default as bourgeois as the latter.

The LSSP’s decision to partner with the government had a great deal to do with its changing opinions about the SLFP. This, in turn, was influenced by developments abroad. In 1944, the Fourth International, which the LSSP had affiliated itself with in 1940 following its split with the Stalinist faction, appointed Michel Pablo as its International Secretary. After the end of the war, Pablo oversaw a shift in the Fourth International’s attitude to the Soviet states in Eastern Europe. More controversially, he began advocating a strategy of cooperation with mass organisations, regardless of their working-class or radical credentials.

Pablo argued that from an objective perspective, tensions between the US and the Soviet Union would lead to a “global civil war”, in which the Soviet Union would serve as a midwife for world socialist revolution. In such a situation the Fourth International would have to take sides. Here he advocated a strategy of entryism vis-à-vis Stalinist parties: since the conflict was between Stalinist and capitalist regimes, he reasoned, it made sense to see the former as allies. Such a strategy would, in his opinion, lead to “integration” into a mass movement, enabling the latter to rise to the level of a revolutionary movement.

Though controversial, Pablo’s line is best seen in the context of his times. The resurgence of capitalism after the war, and the boom in commodity prices, had a profound impact on the course of socialist politics in the Third World. The stunted nature of the bourgeoisie in these societies had forced left parties to look for alternatives. For a while, Trotsky had been their guide: in colonial and semi-colonial societies, he had noted, only the working class could be expected to see through a revolution. This entailed the establishment of workers’ states, but only those arising from a proletarian revolution: a proposition which, logically, excluded any compromise with non-radical “alternatives” to the bourgeoisie.

To be sure, the Pabloites did not waver in their support for workers’ states. However, they questioned whether such states could arise only from a proletarian revolution. For obvious reasons, their reasoning had great relevance for Trotskyite parties in the Third World. The LSSP’s response to them showed this well: while rejecting any alliance with Stalinist parties, the LSSP sympathised with the Pabloites’ advocacy of entryism, which involved a strategic orientation towards “reformist politics.” For the world’s oldest Trotskyite party, then going through a series of convulsions, ruptures, and splits, the prospect of entering the reformist path without abandoning its radical roots proved to be welcoming.

Writing in the left-wing journal Community in 1962, Hector Abhayavardhana noted some of the key concerns that the party had tried to resolve upon its formation. Abhayavardhana traced the LSSP’s origins to three developments: international communism, the freedom struggle in India, and local imperatives. The last of these had dictated the LSSP’s manifesto in 1936, which included such demands as free school books and the use of Sinhala and Tamil in the law courts. Abhayavardhana suggested, correctly, that once these imperatives changed, so would the party’s focus, though within a revolutionary framework. These changes would be contingent on two important factors: the establishment of universal franchise in 1931, and the transfer of power to the local bourgeoisie in 1948.

Paradoxical as it may seem, the LSSP had entered the arena of radical politics through the ballot box. While leading the struggle outside parliament, it waged a struggle inside it also. This dual strategy collapsed when the colonial government proscribed the party and the D. S. Senanayake government disenfranchised plantation Tamils. Suffering two defeats in a row, the LSSP was forced to think of alternatives. That meant rethinking categories such as class, and grounding them in the concrete realities of the country.

This was more or less informed by the irrelevance of classical and orthodox Marxian analysis to the situation in Sri Lanka, specifically to its rural society: with a “vast amorphous mass of village inhabitants”, Abhayavardhana observed, there was no real basis in the country for a struggle “between rich owners and the rural poor.” To complicate matters further, reforms like the franchise and free education, which had aimed at the emancipation of the poor, had in fact driven them away from “revolutionary inclinations.” The result was the flowering of a powerful rural middle-class, which the LSSP, to its discomfort, found it could not mobilise as much as it had the urban workers and plantation Tamils.

Where else could the left turn to? The obvious answer was the rural peasantry. But the rural peasantry was in itself incapable of revolution, as Hector Abhayavardhana has noted only too clearly. While opposing the UNP’s Westernised veneer, it did not necessarily oppose the UNP’s overtures to Sinhalese nationalism. As historians like K. M. de Silva have observed, the leaders of the UNP did not see their Westernised ethos as an impediment to obtaining support from the rural masses. That, in part at least, was what motivated the Senanayake government to deprive Indian estate workers of their most fundamental rights, despite the existence of pro-minority legal safeguards in the Soulbury Constitution.

To say this is not to overlook the unique character of the Sri Lankan rural peasantry and petty bourgeoisie. Orthodox Marxists, not unjustifiably, characterise the latter as socially and politically conservative, tilting more often than not to the right. In Sri Lanka, this has frequently been the case: they voted for the UNP in 1948 and 1952, and voted en masse against the SLFP in 1977. Yet during these years they also tilted to the left, if not the centre-left: it was the petty bourgeoisie, after all, which rallied around the SLFP, and supported its more important reforms, such as the nationalisation of transport services.

One must, of course, be wary of pasting the radical tag on these measures and the classes that ostensibly stood for them. But if the Trotskyite critique of the bourgeoisie – that they were incapable of reform, even less revolution – holds valid, which it does, then the left in the former colonies of the Third World had no alternative but to look elsewhere and to be, as Abhayavardhana noted, “practical men” with regard to electoral politics. The limits within which they had to work in Sri Lanka meant that, in the face of changing dynamics, especially among the country’s middle-classes, they had to change their tactics too.

Meanwhile, in 1953, the Trotskyite critique of Pabloism culminated with the publication of an Open Letter by James Cannon, of the US Socialist Workers’ Party. Cannon criticised the Pabloite line, arguing that it advocated a policy of “complete submission.” The publication of the letter led to the withdrawal of the International Committee of the Fourth International from the International Secretariat. The latter, led by Pablo, continued to influence socialist parties in the Third World, advocating temporary alliances with petty bourgeois and centrist formations in the guise of opposing capitalist governments.

For the LSSP, this was a much-needed opening. Even as late as 1954, three years after S. W. R. D. Bandaranaike formed the SLFP, the LSSP continued to characterise the latter as the alternative bourgeois party in Ceylon. Yet this did not deter it from striking up no-contest pacts with Bandaranaike at the 1956 election, a strategy that went back to November 1951, when the party asked the SLFP to discuss the possibility of eliminating contests in the following year’s elections. Though it extended critical support to the MEP government in 1956, the LSSP opposed the latter once it enacted emergency measures in 1957, mobilising trade union action for a period of three years.

At the 1960 election the LSSP contested separately, with the slogan “N. M. for P.M.” Though Sinhala nationalism no longer held sway as it had in 1956, the LSSP found itself reduced to a paltry 10 seats. It was against this backdrop that it began rethinking its strategy vis-à-vis the ruling party. At the throne speech in April 1960, Perera openly declared that his party would not stabilise the SLFP. But a month later, in May, he called a special conference, where he moved a resolution for a coalition with the party. As T. Perera has noted in his biography of Edmund Samarakkody, the response to the resolution unearthed two tendencies within the oppositionist camp: the “hardliners” who opposed any compromise with the SLFP, including Samarakkody, and the “waverers”, including Leslie Goonewardena.

These tendencies expressed themselves more clearly at the 1964 conference. While the first resolution by Perera called for a complete coalition, inclusive of Ministries, and the second rejected a coalition while extending critical support, the third rejected both tactics. The outcome of the conference showed which way these tendencies had blown since they first manifested four years earlier: Perera’s resolution obtained more than 500 votes, the second 75 votes, the third 25. What the anti-coalitionists saw as the “Great Betrayal” of the LSSP began here: in a volte-face from its earlier position, the LSSP now held the SLFP as a party of a radical petty bourgeoisie, capable of reform.

History has not been kind to the LSSP’s decision. From 1970 to 1977, a period of less than a decade, these strategies enabled it, as well as the Communist Party, to obtain a number of Ministries, as partners of a petty bourgeois establishment. This arrangement collapsed the moment the SLFP turned to the right and expelled the left from its ranks in 1975, in a move which culminated with the SLFP’s own dissolution two years later.

As the likes of Samarakkody and Meryl Fernando have noted, the SLFP needed the LSSP and Communist Party, rather than the other way around. In the face of mass protests and strikes in 1962, the SLFP had been on the verge of complete collapse. The anti-coalitionists in the LSSP, having established themselves as the LSSP-R, contended later on that the LSSP could have made use of this opportunity to topple the government.

Whether or not the LSSP could have done this, one can’t really tell. However, regardless of what the LSSP chose to do, it must be pointed out that these decades saw the formation of several regimes in the Third World which posed as alternatives to Stalinism and capitalism. Moreover, the LSSP’s decision enabled it to see through certain important reforms. These included Workers’ Councils. Critics of these measures can point out, as they have, that they could have been implemented by any other regime. But they weren’t. And therein lies the rub: for all its failings, and for a brief period at least, the LSSP-CP-SLFP coalition which won elections in 1970 saw through something of a revolution in the country.

The writer is an international relations analyst, researcher, and columnist based in Sri Lanka who can be reached at udakdev1@gmail.com
